Ever stared at a screen, bewildered by a jumble of characters that seem to mock your understanding? If so, you have run into a character encoding problem. Such problems plague digital communication, but they can be understood and resolved.

The digital realm relies on a complex system to represent text, images, and other data. At the heart of this system lies character encoding, the method by which computers translate human-readable characters into numerical representations. When these systems fail to align, chaos ensues, manifesting as garbled text, question marks, and symbols that bear little resemblance to the intended message. This article aims to demystify character encoding, providing insights into common issues and solutions to ensure your digital interactions remain clear and comprehensible.

One of the most prevalent character encoding standards is UTF-8, a variable-width character encoding capable of representing a vast array of characters from different languages. UTF-8 is widely used on the internet and is the default encoding for many operating systems and software applications. Its flexibility and compatibility make it a cornerstone of global digital communication.
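To make "variable-width" concrete, here is a minimal sketch that counts how many bytes UTF-8 uses for a few sample characters. The helper name `utf8_width` and the sample characters are illustrative choices, not part of any standard API.

```python
# Sample characters chosen to show each UTF-8 width (illustrative only).
samples = ["A", "ç", "中", "😀"]


def utf8_width(ch: str) -> int:
    """Return the number of bytes UTF-8 uses to encode one character."""
    return len(ch.encode("utf-8"))


for ch in samples:
    # Print the code point and the encoded length in bytes.
    print(f"U+{ord(ch):04X}: {utf8_width(ch)} byte(s)")
```

ASCII characters take one byte, which is why plain English text looks the same in UTF-8 and in older single-byte encodings, while accented letters, Chinese characters, and emoji take two to four bytes.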

However, despite its widespread adoption, issues can arise. When a document or website is not correctly encoded in UTF-8, or when a system interprets a UTF-8 encoded file using a different encoding such as Latin-1 (ISO-8859-1), the result is often the dreaded mojibake: a string of unintelligible characters. For example, the character ç (latin small letter c with cedilla) is stored in UTF-8 as the two bytes 0xC3 0xA7, and a program that reads those bytes as Latin-1 displays them as Ã§.
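The mismatch can be reproduced in a few lines. This is a small sketch using only Python's built-in `str.encode` and `bytes.decode`; the variable names are illustrative.

```python
original = "ç"

# Writing side: the character is stored as UTF-8 (two bytes).
utf8_bytes = original.encode("utf-8")

# Reading side: the same bytes are wrongly decoded as Latin-1,
# so each byte turns into its own character.
misread = utf8_bytes.decode("latin-1")

print(utf8_bytes.hex(), len(misread))
```

One character went in and two came out, which is the typical signature of UTF-8 text read with a single-byte encoding.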

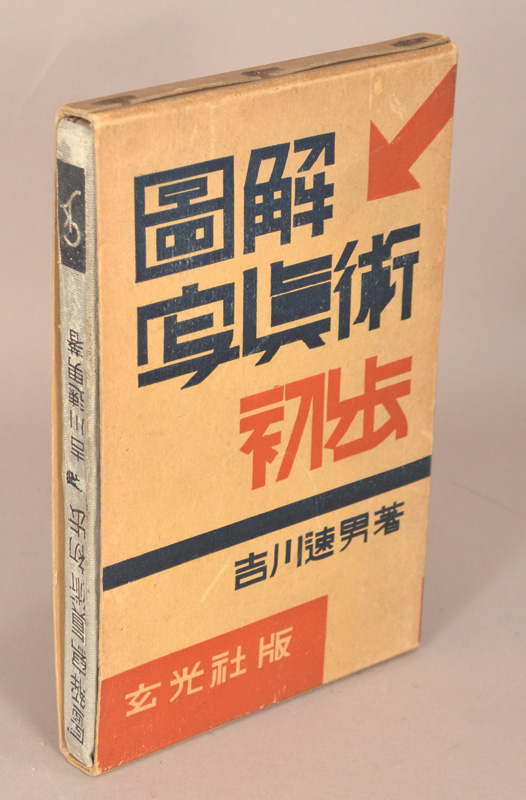

The problem affects every language that uses characters outside the basic ASCII set (the foundation for early text encoding); English is the least exposed because it fits almost entirely within ASCII. Chinese, for example, with its extensive character set, relies heavily on UTF-8 to be rendered accurately. When Chinese text is misinterpreted due to encoding errors, the characters can turn into seemingly random sequences, rendering the text completely unreadable. This is not just a matter of inconvenience; it can severely hamper communication and understanding.

There are tools available to help. When text is corrupted, the first step is to work out which encoding was used to write it and which encoding was used to read it. Python's `ftfy` library, for example, is specifically designed to fix common encoding problems, including mojibake caused by decoding text with the wrong encoding.
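As a rough illustration of the kind of repair such tools automate, the sketch below reverses one specific mistake: UTF-8 bytes that were decoded as Latin-1. It uses only the standard library and is not how `ftfy` itself is implemented; the function name `undo_latin1_misread` is hypothetical.

```python
def undo_latin1_misread(garbled: str) -> str:
    """Reverse one round of 'UTF-8 bytes decoded as Latin-1' mojibake.

    Returns the input unchanged if it does not fit that pattern.
    """
    try:
        # Turn the characters back into the original bytes,
        # then decode those bytes the way they were meant to be read.
        return garbled.encode("latin-1").decode("utf-8")
    except (UnicodeEncodeError, UnicodeDecodeError):
        return garbled


print(undo_latin1_misread("cafÃ©"))
```

Real-world text is messier than this: the wrong encoding is often Windows-1252 rather than Latin-1, and text may have been mangled more than once, which is where a dedicated library earns its keep.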

Understanding the root causes of character encoding problems is essential. Often, these issues stem from incorrect file encoding settings, mismatched character sets between different software applications, or errors during data transfer. Ensuring that all components involved in handling text—from text editors to web servers—are configured to use the same encoding, primarily UTF-8, is a crucial step in preventing these problems.
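One practical habit that follows from this is to name the encoding explicitly whenever a file is read or written, rather than relying on a platform default. The sketch below assumes a throwaway file called `sample.txt` in a temporary directory; the file name and sample text are illustrative.

```python
import tempfile
from pathlib import Path

text = "façade, 中文, naïve"

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "sample.txt"

    # Write and read with the same, explicitly named encoding.
    path.write_text(text, encoding="utf-8")
    round_trip = path.read_text(encoding="utf-8")

    # Reading the same file with a different encoding silently garbles it.
    misread = path.read_text(encoding="latin-1")

print(round_trip == text, misread == text)
```

Note that the mismatched read raises no error, which is why these problems often go unnoticed until someone looks at the output.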

The following table lists a few accented Latin letters with their Unicode code points, adapted from the information provided by Alan Wood's Unicode Resources. Tables like this help in evaluating the character coverage of a particular font.

| Character | Code Point (decimal) | Description |
|---|---|---|
| ç | 231 | latin small letter c with cedilla |
| è | 232 | latin small letter e with grave |
| é | 233 | latin small letter e with acute |
| ê | 234 | latin small letter e with circumflex |
| ë | 235 | latin small letter e with diaeresis |
| ì | 236 | latin small letter i with grave |
| í | 237 | latin small letter i with acute |

When dealing with Chinese text or other languages with characters outside the basic ASCII set, the risk of character encoding issues increases. Misinterpretations of character sets lead to garbled text, making it difficult or impossible to comprehend the original message. Proper encoding and decoding techniques, therefore, are critical for maintaining accurate communication.

In some instances, the mojibake can be quite striking. A seemingly innocuous string of Chinese characters might be converted into a sequence of question marks or other symbols, rendering the text entirely unintelligible. Question marks usually mean the text was converted to an encoding that cannot represent those characters, so they were replaced and the original data is lost. Other stray symbols usually mean the bytes are intact but the software is decoding them with the wrong encoding.
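The two failure modes behave differently, as this small sketch suggests. It uses the two-character string 中文 as sample input and ASCII and Latin-1 as stand-ins for whatever encodings a real system might be using.

```python
text = "中文"

# Lossy conversion: ASCII has no Chinese characters, so each one is
# replaced by "?" and the original cannot be recovered.
lossy = text.encode("ascii", errors="replace").decode("ascii")

# Misreading: the UTF-8 bytes are intact but decoded as Latin-1.
misread = text.encode("utf-8").decode("latin-1")

# Because no bytes were lost, the misreading can be reversed.
recovered = misread.encode("latin-1").decode("utf-8")

print(lossy, len(misread), recovered == text)
```

Two characters become two question marks in the lossy case and six unrelated characters in the misread case, and only the second can be repaired.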

Another common issue is a page or document that declares the wrong encoding, or none at all. When a web page's `Content-Type` declaration (the HTTP header or the equivalent HTML meta tag) does not accurately specify the encoding, browsers may guess incorrectly, leading to display problems. Similarly, text editors and other applications may misinterpret the encoding of text files, causing the same type of problems.
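A program that receives a `Content-Type` value can read the declared charset instead of guessing. The sketch below leans on the standard library's `email.message.Message` to parse the value; the helper name `declared_charset` and the UTF-8 fallback are assumptions made for illustration.

```python
from email.message import Message


def declared_charset(content_type: str, default: str = "utf-8") -> str:
    """Extract the charset parameter from a Content-Type value.

    Falls back to `default` when no charset is declared.
    """
    msg = Message()
    msg["Content-Type"] = content_type
    # get_content_charset() returns the charset in lower case, or None.
    return msg.get_content_charset() or default


body = "ç".encode("utf-8")
charset = declared_charset("text/html; charset=UTF-8")
decoded = body.decode(charset)
print(charset)
```

This only helps when the declaration is truthful; a page that says `ISO-8859-1` but actually contains UTF-8 bytes will still be decoded incorrectly.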

Tools such as the `ftfy` library offer a degree of automated correction for common issues. In many cases, these tools can identify the encoding mix-up and convert the text to a readable form. When they are not successful, manual intervention may be required, which could mean researching the original source to determine which encoding was used, or testing various decodings until the text is displayed correctly.
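The manual approach of testing candidate decodings can be sketched as a simple loop. The function name `try_decodings` and the candidate list are illustrative, and the candidates are assumed to be valid Python codec names.

```python
from typing import Dict, Iterable, Optional


def try_decodings(raw: bytes, candidates: Iterable[str]) -> Dict[str, Optional[str]]:
    """Decode `raw` with each candidate encoding.

    Maps each encoding name to the decoded text, or to None if the
    bytes are not valid in that encoding.
    """
    results: Dict[str, Optional[str]] = {}
    for name in candidates:
        try:
            results[name] = raw.decode(name)
        except UnicodeDecodeError:
            results[name] = None
    return results


raw = "café".encode("utf-8")
results = try_decodings(raw, ["ascii", "utf-8", "latin-1", "cp1252"])
for name, text in results.items():
    print(name, "ok" if text is not None else "failed")
```

A decoding that succeeds is not necessarily the right one: single-byte encodings such as Latin-1 accept any byte sequence, so the results still need to be checked by a human or against known text.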

The issue of character encoding transcends language barriers and geographical boundaries. It is a common concern, and its resolution requires a combination of knowledge and practical skills. Mastering the basics of character encoding can make a huge difference to the user's experience.

The character ç, when properly encoded in UTF-8 and decoded as UTF-8, is displayed correctly on a wide variety of systems. When it is decoded with the wrong encoding, it turns into gibberish. Proper understanding and implementation of character encoding is therefore a key element in ensuring the readability of digital information across devices.

In linguistics, the correct representation of phonetic sounds is crucial. The International Phonetic Alphabet (IPA) provides a standardized way of representing sounds across languages, which matters whenever the pronunciation of a language is discussed in writing.

In the Serbo-Croatian language, differences in phonetic elements, such as rounding of the lips, can differentiate characters. Understanding the phonetic features helps with accurate speech and pronunciation.

Encoding issues can manifest in many forms. In text analysis, particularly when data comes from different sources, incorrect or mixed encodings can cause significant problems. Libraries and tools such as `ftfy`, which repair mis-decoded text automatically, are useful when trying to correct them.

Unicode offers a comprehensive character set that includes not just common letters and numbers but also symbols and characters from various languages. The ability to handle such a vast range of characters is critical in a globally connected world.

The Unicode Standard, with its several versions, has become the bedrock of encoding. When working with specialized characters, ensuring the correct character is chosen is vital. Characters can be identified by their specific code points, allowing for precision in representation.
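Looking up a character by code point, or the reverse, takes only built-in functions in Python. This is a minimal sketch using `ord`, `chr`, and the standard `unicodedata` module; the helper name `describe` is illustrative.

```python
import unicodedata


def describe(ch: str) -> str:
    """Return the code point (hex and decimal) and Unicode name of a character."""
    return f"U+{ord(ch):04X} ({ord(ch)}) {unicodedata.name(ch)}"


# Character to code point and name.
print(describe("ç"))

# Code point back to character.
same_char = chr(0xE7)
```

The code point identifies the character independently of any encoding; UTF-8, UTF-16, and Latin-1 each store code point 231 as different bytes.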

For instance, Nushu characters, which have a unique history and context, are correctly represented via Unicode code points. The ability to render and work with these characters depends on appropriate encoding choices, which allows for the preservation of this linguistic and cultural information.

Character encoding is more than a technical detail; it is a gatekeeper of understanding and communication. It allows for the proper transfer of data and information across the digital landscape. The correct application of encoding principles is essential for effective communication.